Can generative AI generate completely new knowledge?

“AI is going to take over the world.” You have probably heard this too many times. Recently, with the rise of generative AI, this idea has spread even further among the general public. But I do not think so. I do not believe AI will ever take over the world. Most generative AI tools are built on Natural Language Processing (NLP) models, which have been around for several decades. What has changed is that, with the advent of ChatGPT, these models have become easy for the general public to use.

I have been using machine learning in research, teaching, and business for almost a decade now. An AI system can only perform as well as three things allow: the dataset its parameters are trained on, the capability of the model itself, and the humans who build applications around it. It can never do anything completely new. For example, it can never invent a new alternative source of energy; only humans can.

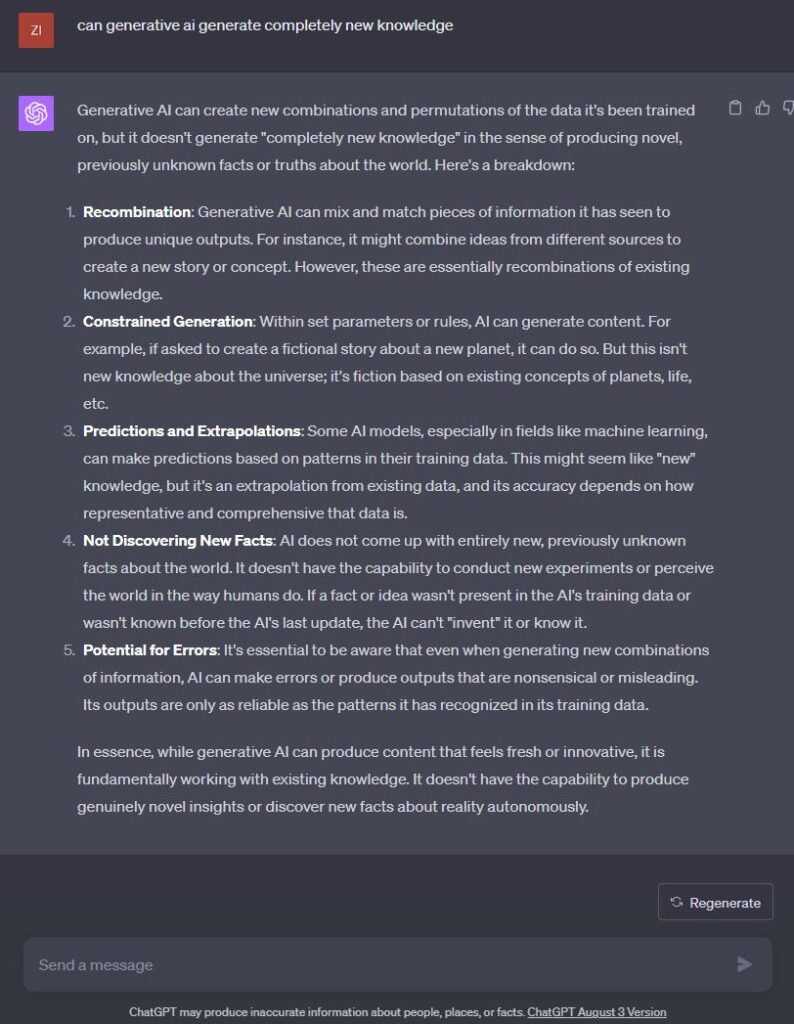

To run my e-learning platform www.researchhub.org, I rely on a team of people to manage the website, and even so it has been hard to keep it running smoothly. An AI cannot even build a website and keep it running smoothly without humans in the loop. To support my points and present them systematically, I asked ChatGPT-4: “Can generative AI generate completely new knowledge?” Here is the response I got.

Generative AI can create new combinations and permutations of the data it’s been trained on, but it doesn’t generate “completely new knowledge” in the sense of producing novel, previously unknown facts or truths about the world. Here’s a breakdown:

- Recombination: Generative AI can mix and match pieces of information it has seen to produce unique outputs. For instance, it might combine ideas from different sources to create a new story or concept. However, these are essentially recombinations of existing knowledge.

- Constrained Generation: Within set parameters or rules, AI can generate content. For example, if asked to create a fictional story about a new planet, it can do so. But this isn’t new knowledge about the universe; it’s fiction based on existing concepts of planets, life, etc.

- Predictions and Extrapolations: Some AI models, especially in fields like machine learning, can make predictions based on patterns in their training data. This might seem like “new” knowledge, but it’s an extrapolation from existing data, and its accuracy depends on how representative and comprehensive that data is.

- Not Discovering New Facts: AI does not come up with entirely new, previously unknown facts about the world. It doesn’t have the capability to conduct new experiments or perceive the world in the way humans do. If a fact or idea wasn’t present in the AI’s training data or wasn’t known before the AI’s last update, the AI can’t “invent” it or know it.

- Potential for Errors: It’s essential to be aware that even when generating new combinations of information, AI can make errors or produce outputs that are nonsensical or misleading. Its outputs are only as reliable as the patterns it has recognized in its training data.

In essence, while generative AI can produce content that feels fresh or innovative, it is fundamentally working with existing knowledge. It doesn’t have the capability to produce genuinely novel insights or discover new facts about reality autonomously.
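The “recombination” point can be made concrete with a deliberately tiny toy in Python. It is a word-level bigram model, which is vastly simpler than the transformer behind ChatGPT. It is only an analogy, not how ChatGPT works. The corpus and the function names (`train_bigrams`, `generate`, `only_seen_pairs`) are hypothetical, chosen for illustration. The model can output sentences that never appeared in its training text, yet every adjacent word pair it emits was taken from that text. Its “new” sentences are therefore recombinations of what it was given, and some of them may even be false.

```python
import random
from collections import defaultdict


def tokenize(sentence):
    """Lower-case, split on whitespace, and add start/end markers."""
    return ["<s>"] + sentence.lower().split() + ["</s>"]


def train_bigrams(corpus):
    """Map each word to every word that followed it in the training text."""
    transitions = defaultdict(list)
    for sentence in corpus:
        words = tokenize(sentence)
        for current, nxt in zip(words, words[1:]):
            transitions[current].append(nxt)
    return transitions


def generate(transitions, rng, max_words=20):
    """Build a sentence by repeatedly sampling a word that followed the previous one."""
    word, output = "<s>", []
    for _ in range(max_words):
        word = rng.choice(transitions[word])
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)


def only_seen_pairs(sentence, transitions):
    """True if every adjacent word pair in `sentence` occurred in the training text."""
    words = ["<s>"] + sentence.split()
    return all(nxt in transitions.get(cur, []) for cur, nxt in zip(words, words[1:]))


# Hypothetical toy corpus; real language models are trained on vastly more text.
corpus = [
    "solar panels convert sunlight into electricity",
    "wind turbines convert wind into electricity",
    "batteries store electricity for later use",
]
model = train_bigrams(corpus)
rng = random.Random(42)
for _ in range(5):
    sentence = generate(model, rng)
    print(sentence, only_seen_pairs(sentence, model))
```

Every printed line ends with `True`, because `generate` only ever picks a word that followed the previous word somewhere in the corpus. A sample such as “wind turbines convert sunlight into electricity” would be a new sentence built entirely from old pieces, and a wrong one. Modern language models use neural networks over subword tokens and can produce word sequences never seen verbatim, so this toy exaggerates the constraint. It illustrates the idea of recombination, not the exact limits of any particular system.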

P.S. Part of the text was produced using ChatGPT-4, and the cover image using deepai.org.